Why CRA Proficiency Needs A Boost From Better Assessment And Training

By Gerald DeWolfe, CRA Assessments

Clinical Research Associates (CRAs) monitoring clinical trials can be considered one of clinical research’s original commitments to patient centricity. With it, the industry shows its commitment to protecting patients’ rights and welfare by ensuring compliance with the study protocol and applicable regulations while also ensuring accurate and reliable data. Is our industry truly delivering on its commitment to deploying CRAs with the appropriate level of knowledge, skills, and abilities (KSAs) to monitor trials? Based on 10-plus years of comprehensive and objective data collected by CRA Assessments, the answer is a resounding “no.”

Too Many CRAs Can’t Identify Critical And Major Issues

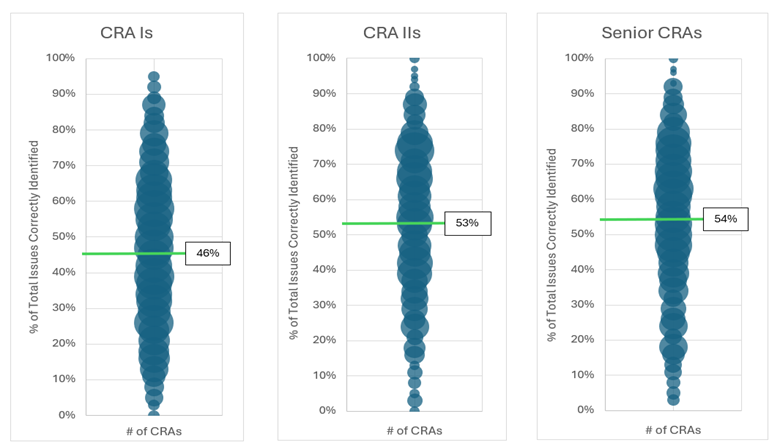

Data collected using a monitoring visit simulation across thousands of CRAs shows that the average CRA can identify only about 50% of the critical and major issues in a virtual monitoring visit. The data also shows significant variability within the commonly used CRA titles (CRA I, CRA II, and Senior CRA).

The Method For Evaluating CRA KSAs Is The Problem

For the past 30 years, the primary method for evaluating CRA KSAs has been the oversight monitoring visit. This visit may occur after a CRA joins a company and then generally recurs as a yearly evaluation, in which a more experienced CRA or a manager attends a site monitoring visit to observe the CRA conducting activities such as regulatory document review, informed consent process review, source data review (SDR), and source data verification (SDV). Unfortunately, this approach has serious flaws as a primary evaluation and development method:

- Every CRA is evaluated based on the site monitored and the issues (or absence of issues) present during a single site visit, so every CRA is evaluated under a different set of circumstances. Most visits also do not allow for a comprehensive KSA evaluation.

- Evaluators differ, and there is no standard evaluation approach. Combined with the site-to-site differences noted above, this means evaluators' post-visit reports can't be compared across a population of CRAs.

- There is generally no standardized follow-up process for assigning additional training, oversight, or remediation. Most companies leave that responsibility to line managers or those in a similar position.

While some CRAs are correctly evaluated and given valuable feedback, the data presented above shows there are significant deficiencies in CRAs’ KSAs that often go undetected when using this traditional evaluation method.

How Is A Monitoring Simulation Process Different?

During the initial KSA evaluation, CRAs complete a comprehensive virtual site monitoring visit, for which they first learn a protocol and an abbreviated monitoring plan. Once the simulation starts, CRAs have access to all the documents and study drug at the virtual site that would be present at an actual monitoring visit (source documents, informed consent forms, regulatory documents, EDC, study logs, and drug accountability). The virtual site visit takes approximately six hours on average, consistent with a normal one-day monitoring visit.

The CRA decides how the site is monitored: which documents to review, in which order, for how long, and which documents to compare, as well as the monitoring notes made to address issues and any communications directed to site personnel. These monitoring notes are then compared with the issues embedded in the simulation to determine which issues were correctly identified. Finally, the CRAs receive feedback on any issues they did not correctly identify.

The simulation contains critical and major issues in Source Data Review (SDR), Source Data Verification (SDV), the informed consent process, IRB/IEC submission and approval documentation, potential fraud, scientific misconduct, and delegation of authority. Scoring is based on the percentage of total issues correctly identified.
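As a rough illustration of that scoring rule, the sketch below computes "% Total Issues Correctly Identified" and the list of missed issues that feedback would cover. It is a hypothetical sketch, not CRA Assessments' actual scoring engine: the `EmbeddedIssue` structure, the issue IDs, and the assumption that monitoring notes have already been matched to issue IDs upstream are all illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EmbeddedIssue:
    """One issue planted in the virtual site (hypothetical structure)."""
    issue_id: str
    domain: str    # e.g. "SDR", "SDV", "Informed Consent"
    severity: str  # "critical" or "major"


def score_visit(embedded: list[EmbeddedIssue],
                credited_ids: set[str]) -> tuple[float, list[EmbeddedIssue]]:
    """Return (% of total issues correctly identified, issues missed).

    `credited_ids` holds the IDs of embedded issues that the CRA's monitoring
    notes were judged to address. Matching notes to issues is assumed to
    happen before this step. IDs that are not embedded issues are ignored.
    """
    if not embedded:
        raise ValueError("simulation must contain at least one embedded issue")
    found = [i for i in embedded if i.issue_id in credited_ids]
    missed = [i for i in embedded if i.issue_id not in credited_ids]
    return 100.0 * len(found) / len(embedded), missed


if __name__ == "__main__":
    # Hypothetical four-issue simulation; the CRA is credited with two issues.
    issues = [
        EmbeddedIssue("ICF-01", "Informed Consent", "critical"),
        EmbeddedIssue("SDV-04", "SDV", "major"),
        EmbeddedIssue("AE-02", "SDR", "critical"),
        EmbeddedIssue("DOA-01", "Delegation of Authority", "major"),
    ]
    pct, missed = score_visit(issues, {"ICF-01", "AE-02"})
    print(pct)                           # 50.0
    print([i.issue_id for i in missed])  # ['SDV-04', 'DOA-01'] -> feedback items
```

Returning the missed issues alongside the percentage reflects the process described above, where the score and the post-simulation feedback come from the same comparison.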

Examples Of Proficiency Concerns

Figure 1 shows the average % Total Issues Correctly Identified (green line) during the virtual monitoring visit and the distribution of scores based upon CRA Title. This data represents over 1,000 CRAs with a similar proportion of CRA Is, CRA IIs, and senior CRAs and with global distribution.

Figure 1: % of Total Issues Correctly Identified in the Initial KSA Evaluation Stratified by CRA Title

The individual scores range from 0% to 100%. The average across all three CRA titles is 51% Total Issues Correctly Identified for this group of CRAs. These averages and this distribution are unacceptable given the key role CRAs play in the industry's commitment to patients. Interestingly, the senior CRAs have three times as much experience as the CRA IIs, yet they have the same average % Total Issues Correctly Identified. While CRA IIs do score higher than CRA Is, the difference is not meaningful. The data suggests that years of monitoring experience are not a good indicator of a CRA's KSAs. On the positive side, the data also shows that some CRAs in all three groups perform very well on the simulation.
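The point that similar averages can hide very different spreads can be shown with a small stratification sketch. The record format of (title, score) pairs is an assumption, and the sample values are made up for illustration only; they are not CRA Assessments data.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_title(results: list[tuple[str, float]]) -> dict[str, dict[str, float]]:
    """Per-title mean, min, and max of % Total Issues Correctly Identified.

    `results` is a list of (CRA title, score) pairs, a hypothetical stand-in
    for real assessment records.
    """
    groups: dict[str, list[float]] = defaultdict(list)
    for title, pct in results:
        groups[title].append(pct)
    return {
        title: {"mean": mean(s), "min": min(s), "max": max(s)}
        for title, s in groups.items()
    }


def pooled_mean(results: list[tuple[str, float]]) -> float:
    """Average score across all CRAs, regardless of title."""
    return mean(pct for _, pct in results)


if __name__ == "__main__":
    # Illustrative made-up scores, NOT real assessment data.
    sample = [("CRA I", 40.0), ("CRA I", 60.0), ("CRA II", 50.0),
              ("Senior CRA", 90.0), ("Senior CRA", 10.0)]
    for title, stats in summarize_by_title(sample).items():
        print(title, stats)
    print(pooled_mean(sample))  # 50.0
```

In this toy sample every title averages 50%, but the "Senior CRA" group ranges from 10% to 90%, which is why the distribution matters as much as the mean.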

To further illustrate the severity of the situation, consider data from the SDR and SDV domains showing how well CRAs identify missing adverse events (AEs) in the source documents and EDC. In the simulation, the CRA must first identify that an AE occurred and was not evaluated by the investigator in the source documentation, and then verify that the AE was not captured in the EDC. The simulation used for this example contains three AEs that are missing from both the source documentation and the EDC. The data shows that 45% of all CRAs could not identify any of the missing AEs, and only 7% of CRAs identified that all three AEs were missing from the EDC.
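Figures such as "45% identified none" and "7% identified all three" come from tallying how many of the missing AEs each CRA found. The sketch below shows that tally, assuming each CRA's result has already been reduced to a count of missing AEs credited (0 to 3). The function name and the sample counts are hypothetical and do not reproduce the study data.

```python
from collections import Counter


def ae_detection_shares(found_counts: list[int], total_aes: int = 3) -> dict[int, float]:
    """Percent of CRAs who identified k of `total_aes` missing AEs, for k = 0..total_aes.

    Each entry in `found_counts` is one CRA's number of missing AEs credited
    (a hypothetical input format).
    """
    if not found_counts:
        raise ValueError("need at least one CRA result")
    if any(c < 0 or c > total_aes for c in found_counts):
        raise ValueError("each count must be between 0 and total_aes")
    tally = Counter(found_counts)
    n = len(found_counts)
    # Counter returns 0 for k values no CRA reached, so every k is reported.
    return {k: 100.0 * tally[k] / n for k in range(total_aes + 1)}


if __name__ == "__main__":
    # Ten hypothetical CRAs; not real assessment data.
    counts = [0, 0, 1, 2, 3, 0, 1, 1, 2, 0]
    shares = ae_detection_shares(counts)
    print(shares)     # {0: 40.0, 1: 30.0, 2: 20.0, 3: 10.0}
    print(shares[0])  # share who found none of the missing AEs
    print(shares[3])  # share who found all three
```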

What Level Of CRA Proficiency Do Patients Deserve?

Given the responsibilities that ICH guidelines assign to CRAs, what level of proficiency should CRAs demonstrate? Based on ICH guidelines, CRAs should have mastery of their position. Of course, there must be a progression from no experience to mastery. Even so, the data for CRA Is shows that too many CRA Is are being released to conduct independent monitoring visits without an acceptable level of KSAs. The data also shows that the average KSAs for CRA IIs and senior CRAs are unacceptable. These CRA IIs and senior CRAs would have progressed from the CRA I title, and the data suggests that additional experience did not significantly increase their proficiency.

What Can And Should Be Done By Industry?

The release of ICH E6 (R3) provides a perfect framework and impetus for pharmaceutical/biotechnology companies to reevaluate the methods their CRA providers use to ensure CRAs have the appropriate KSAs. The EU effective date is July 23, 2025, and other countries will likely follow over the next year. Sponsor companies should rethink their monitoring efforts, including incorporating a monitoring simulation, to meet key items in ICH E6 (R3), Section 3:

- 3.4 Qualification and Training: The sponsor should utilize appropriately qualified individuals for the activities to which they are assigned (e.g., biostatisticians, clinical pharmacologists, physicians, data scientists/data managers, auditors and monitors) throughout the trial process.

- 3.6.7 A sponsor may transfer any or all of the sponsor’s trial-related activities to a service provider; however, the ultimate responsibility for the sponsor’s trial-related activities, including protection of participants’ rights, safety and well-being and reliability of the trial data, resides with the sponsor. Any service provider used for clinical trial activities should implement appropriate quality management and report to the sponsor any incidents that might have an impact on the safety of trial participants or/and trial results.

- 3.6.9 The sponsor should have access to relevant information (e.g., SOPs and performance metrics) for selection and oversight of service providers.

- 3.6.10 The sponsor should ensure appropriate oversight of important trial-related activities that are transferred to service providers and further subcontracted.

A more comprehensive and objective model for evaluating CRA proficiency needs to be implemented to ensure that the monitoring of clinical trials delivers on the commitment made to the patients.

About The Expert:

Gerald DeWolfe has been evaluating CRAs using a monitoring simulation for the past 12 years. The driving force for the creation of the simulation was his previous 14 years in the CRO industry as a CRA, project manager, and project director.