Automation: From Protocol Definition To Submission

By Brian Hermann, senior director, global clinical data integration; Narayanrao Pavuluri, senior director, global clinical data integration; and Donald Thampy, executive director, global clinical data integration, Merck

Data is central to the development and execution of clinical trials. It is collected, transformed, and analyzed to meet regulatory requirements and support decision-making. Ensuring data integrity and lineage is essential for traceability as data moves through different processes and systems.

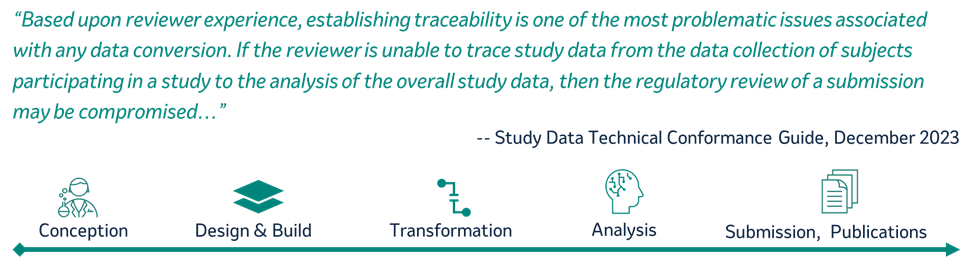

The FDA recently highlighted its challenges with establishing traceability when reviewing marketing applications to verify the integrity and lineage of the data and documentation as part of the application review process. The FDA reviewer’s ability to trace study data from the point it’s collected to the point of overall study data analysis for regulatory review is a critical area of evaluation.

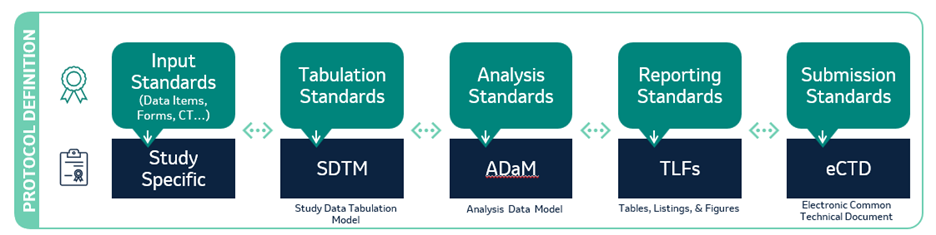

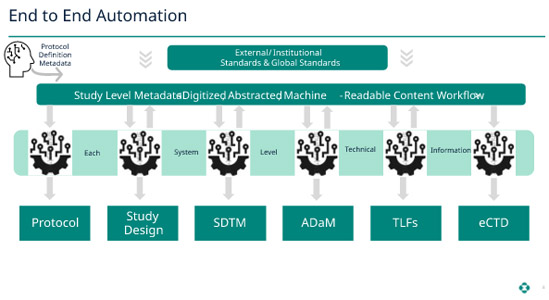

While the FDA has shared insights into this challenge, the industry is also working to mitigate it by leveraging existing metadata from protocol definition and data standards through to submission. The clinical data flow below underlies the present-day challenge in the data compilation process. The concepts outlined in the remainder of this article are opportunities for automation within this ecosystem.

COMMON THEMES SETTING THE STAGE FOR AUTOMATION OPPORTUNITIES

- STANDARDS applied to DATA elements in a continuous state of transition

- Derivations & TRANSFORMATIONS extendable to only specific outputs

- Opportunities to associate METADATA enabling reuse… DATA IN MOTION

Study Development Process

While a short article cannot cover all the technical details, the aim is to show the fundamental concepts: maintaining end-to-end lineage, separating data transformation logic from programming, and automating the processes along the clinical data journey, together with the gains we can make as an industry. The most notable advantage is maintaining the lineage of the data from collection through submission.

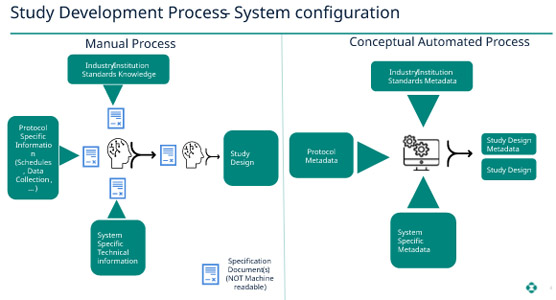

Study Definition/Design

Currently, most, if not all, specifications from protocol through SDTM (Study Data Tabulation Model), ADaM (Analysis Data Model), and submission are defined in documents, which we then convert into programs in the native systems used for processing (electronic data capture [EDC]; extract, transform, load [ETL]; SDTM; ADaM; etc.). For example, to design a study, one needs to understand the protocol document, system-specific technical information, and industry/institutional standards. This knowledge and experience must be synthesized and articulated in a study development document that defines the study data collectors in the EDC and clinical data management system (CDMS). The central premise is to move from a document mindset to content in a digital, machine-readable format. Once protocol, technical, and standards information are digitized into machine-readable formats, we have all the elements needed to start a study in an EDC system. Study design can then be automated, which begins to transform our current ways of working.
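As a rough illustration of what "machine-readable" could mean here, the sketch below combines a digitized protocol schedule with a standards library to generate the field definitions an EDC build would need. The structures `PROTOCOL` and `STANDARDS`, the element names, and the function `build_form_fields` are all hypothetical and greatly simplified; they are assumptions made for this example, not a description of any particular EDC system or standards format.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, simplified standards library: one entry per collected data element.
STANDARDS = {
    "SYSBP": {"label": "Systolic Blood Pressure", "type": "integer", "unit": "mmHg"},
    "DIABP": {"label": "Diastolic Blood Pressure", "type": "integer", "unit": "mmHg"},
    "AETERM": {"label": "Reported Term for the Adverse Event", "type": "text", "unit": None},
}

# Hypothetical digitized protocol: which elements are collected at which visit.
PROTOCOL = {
    "study_id": "DEMO-001",
    "schedule": {
        "Screening": ["SYSBP", "DIABP"],
        "Week 4": ["SYSBP", "DIABP", "AETERM"],
    },
}


@dataclass(frozen=True)
class Field:
    """One data collection field, as an EDC build step might consume it."""
    visit: str
    name: str
    label: str
    data_type: str
    unit: Optional[str]


def build_form_fields(protocol, standards):
    """Derive field definitions from the protocol schedule and the standards library."""
    fields = []
    for visit, elements in protocol["schedule"].items():
        for name in elements:
            if name not in standards:
                # A protocol element with no standard is flagged, not silently built.
                raise KeyError(f"{name!r} at visit {visit!r} has no standards definition")
            std = standards[name]
            fields.append(Field(visit, name, std["label"], std["type"], std["unit"]))
    return fields


fields = build_form_fields(PROTOCOL, STANDARDS)
for f in fields:
    print(f.visit, f.name, f.label, f.data_type, f.unit)
```

The point of the sketch is that no study-specific program is written: a new study changes only the protocol and standards content, and the same generation step applies.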

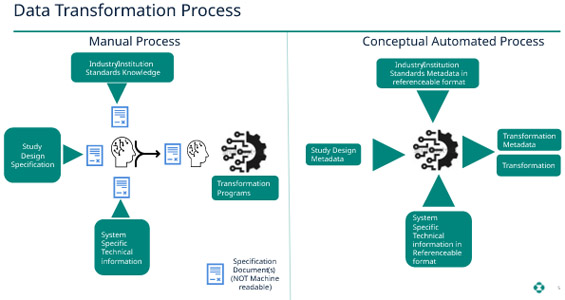

Data Transformations

When transforming clinical data to standardized SDTM (or performing any other transformation needed by stakeholders such as statisticians, medical monitors, and adverse event monitors), the study definition is mostly available in machine-readable format. However, the standards knowledge and the system-specific technical knowledge are not digitized. Furthermore, the transformation logic is generally embedded in the transformation programs. This constraint limits the value of the end-to-end process and makes it difficult to answer questions from auditors and inspectors about where and how transformations occur.

We propose to digitize the transformation logic and move it into the metadata definitions, so that the transformation programs read the metadata and transform the data as needed. Because the logic is now in metadata, it is much easier to extract and understand during audits and inspections. As an added advantage, if transformation logic needs to be modified, only the metadata needs to change. All changes to the metadata are captured in an audit trail that can be extracted at any time. This method provides easy access to the lineage of the data as well as a full audit of the changes. Going through the logic of programs is a cumbersome process. By adopting this approach, we eliminate the manual process of writing specifications and programs and replace it with fully transparent, auditable, and automated transformation processes.
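A minimal sketch of this idea follows, assuming a deliberately tiny rule vocabulary. The mapping entries in `MAPPINGS`, the rule names in `RULES`, and the helpers `transform` and `update_mapping` are hypothetical names invented for illustration; the target names are borrowed from the SDTM vital signs domain only to make the example concrete. The generic program contains no study-specific logic, and every metadata change is recorded with its before and after state.

```python
import copy
from datetime import datetime, timezone

# Hypothetical mapping metadata: each entry says how one target variable is derived.
MAPPINGS = [
    {"target": "USUBJID", "sources": ["study", "subject"], "rule": "concat", "args": {"sep": "-"}},
    {"target": "VSTESTCD", "sources": ["test"], "rule": "upper", "args": {}},
    {"target": "VSORRES", "sources": ["value"], "rule": "copy", "args": {}},
]

# Small, generic rule library; the study-specific logic lives in MAPPINGS, not here.
RULES = {
    "copy": lambda values, args: values[0],
    "upper": lambda values, args: str(values[0]).upper(),
    "concat": lambda values, args: args["sep"].join(str(v) for v in values),
}

AUDIT_TRAIL = []


def transform(record, mappings):
    """Apply every mapping entry to one collected record."""
    out = {}
    for m in mappings:
        values = [record[s] for s in m["sources"]]
        out[m["target"]] = RULES[m["rule"]](values, m["args"])
    return out


def update_mapping(mappings, target, changes, user, reason):
    """Change one mapping entry and record the before/after state in the audit trail."""
    for m in mappings:
        if m["target"] == target:
            before = copy.deepcopy(m)
            m.update(changes)
            AUDIT_TRAIL.append({
                "target": target,
                "before": before,
                "after": copy.deepcopy(m),
                "user": user,
                "reason": reason,
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return
    raise KeyError(target)


raw = {"study": "DEMO-001", "subject": "0042", "test": "sysbp", "value": "120"}
sdtm_row = transform(raw, MAPPINGS)

# Changing the logic means editing metadata only; the program above is untouched.
update_mapping(MAPPINGS, "USUBJID", {"args": {"sep": "/"}}, user="jdoe", reason="example change")
changed_row = transform(raw, MAPPINGS)
```

In this arrangement, an inspector asking how a variable was derived can be answered from `MAPPINGS` and `AUDIT_TRAIL` directly, without reading program code.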

Bringing It All Together

The process described above can be followed for all subsequent steps, from the transformation to ADaM, to tables, listings, and figures (TLF), and through submission. Each step generates metadata that provides the lineage of data items from collection through submission.

Now, when the submission package is compiled, this metadata (which has been maintained and preserved along the data life cycle) can be used to create hyperlinks in the submission package, such as an electronic Common Technical Document (eCTD). The reviewer can use these hyperlinks to navigate through the submission. For example, they could traverse the lineage from the application summary to the individual data item and see how it was transformed and changed, as applicable. This ability will give reviewers a much more user-friendly review environment and enable a more efficient review process.
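To make the traversal concrete, here is a small sketch assuming each step has recorded, for every item it produces, the items it was derived from. The `LINEAGE` records, the item identifiers (including the table number), and the functions `trace` and `to_anchor` are hypothetical. The anchor format is an invented stand-in for a link target and is not how eCTD hyperlinks are actually specified.

```python
# Hypothetical lineage metadata: each item records the step that produced it
# and the items it was derived from.
LINEAGE = {
    "TLF:T14.2.1/mean_sysbp": {"step": "summary statistic (mean)", "sources": ["ADaM:ADVS.AVAL"]},
    "ADaM:ADVS.AVAL": {"step": "analysis value assignment", "sources": ["SDTM:VS.VSSTRESN"]},
    "SDTM:VS.VSSTRESN": {"step": "standardization to numeric result", "sources": ["EDC:VITALS.SYSBP"]},
    "EDC:VITALS.SYSBP": {"step": "collected", "sources": []},
}


def trace(item, lineage):
    """Walk from a reported item back to its collected sources, depth-first."""
    path = []
    seen = set()
    stack = [item]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        node = lineage[current]
        path.append((current, node["step"]))
        # Reverse so that the first listed source is visited first.
        stack.extend(reversed(node["sources"]))
    return path


def to_anchor(item):
    """Turn an item identifier into an illustrative link target."""
    return "#" + item.lower().replace(":", "-").replace(".", "-").replace("/", "-")


for item, step in trace("TLF:T14.2.1/mean_sysbp", LINEAGE):
    print(f"{item} ({step}) -> {to_anchor(item)}")
```

Because the lineage was captured as each step ran, building the navigation is a lookup rather than a reconstruction from program code.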

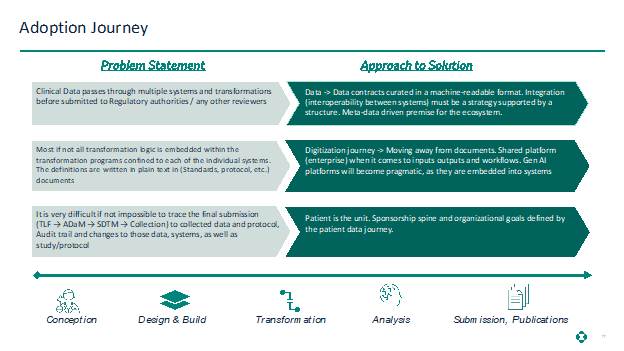

Adoption Journey

Having outlined the new working model above, we need to consider how we might achieve it. These considerations are outlined in the illustration below.

The critical challenge for industry emerges from the growth (sometimes overgrowth) and maintenance of these complex technology ecosystems and our inability to rein in key principles around data, including data ownership, data digitization, streamlined organizational structures, and resilient strategy and structure change.

3 Key Takeaways & Upsides For Automation

- “Data in movement” makes data ownership challenging. Defined data contracts that are curated at the metadata level therefore address traceability of data movement, ownership, and reuse of digitized, machine-readable formats.

- Once we transition from a document mindset to a digitized, metadata mindset, we have the potential both to lower the cost of managing change, because changes can be subjected to automated impact analysis, and to propagate a change to multiple layers/paradigms of systems or integrations. This also sets up opportunities to apply cutting-edge capabilities and techniques using ML, AI, and Gen AI.

- Oftentimes in the busyness of getting to goals, we forget the purpose of our existence as a pharmaceutical community. We are here for the patients. We as an industry continually need to think end-to-end in our endeavors, and it comes with the need for a strong sponsorship spine driven by organizational goals that tie outcomes to the patient data journey. Organizational unity is achieved by striving for specific outcomes, aligning cross-functional processes, and effectively leveraging technology. These efforts will strengthen the ability to adapt to change by fostering a culture of continuous learning, thoughtful innovation, and purposeful action. Ultimately, this approach generates value for patients, strengthens organizations, and enhances satisfaction among teams.
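The automated impact analysis mentioned in the second takeaway can be sketched as a graph walk over dependency metadata. The `DOWNSTREAM` map, its item identifiers, and the function `impacted_by` are hypothetical and assume that each item's direct dependents have already been captured as metadata; a real ecosystem would hold far more items and richer relationships.

```python
from collections import deque

# Hypothetical dependency metadata: each item lists the items derived directly from it.
DOWNSTREAM = {
    "EDC:VITALS.SYSBP": ["SDTM:VS.VSSTRESN"],
    "SDTM:VS.VSSTRESN": ["ADaM:ADVS.AVAL"],
    "ADaM:ADVS.AVAL": ["ADaM:ADVS.CHG", "TLF:T14.2.1"],
    "ADaM:ADVS.CHG": ["TLF:T14.2.2"],
}


def impacted_by(changed, downstream):
    """Return every item that depends, directly or indirectly, on the changed item."""
    impacted = []
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


print(impacted_by("SDTM:VS.VSSTRESN", DOWNSTREAM))
```

With specifications held in documents, the same question requires reading each downstream specification and program by hand; with metadata, it is a query.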

About The Authors:

Narayanarao “Rao” Pavuluri is passionate about bringing configurable and adaptable systems into clinical research, automating manual processes, improving quality, and bringing efficiencies to end-to-end data flow and processes. He has been working in clinical development for more than 30 years at various levels starting from lab information systems and data management systems through submissions.

Brian Hermann brings a deep and broad understanding of the development, analysis, and compliance of clinical trial data to support regulatory submissions. Brian’s most recent experience includes leading operational teams and successful implementations to support strategic transformational initiatives specific to data management, statistical programming, and data science needs.

Donald “Don” Thampy is driven by a deep passion for bringing innovative therapies to patients. He actively catalyzes this vision by leveraging the thought capital of individuals and partners, as well as their technological assets and innovative frameworks. Through this collaborative approach, he strives to deliver value-based solutions that are meaningful and impactful for both patients and the organization.