Best Practices For Evaluating The Relevance And Quality Of RWD

By Boshu Ru and Weilin Meng, Real-world Data Analytics and Innovation, Merck Research Laboratories

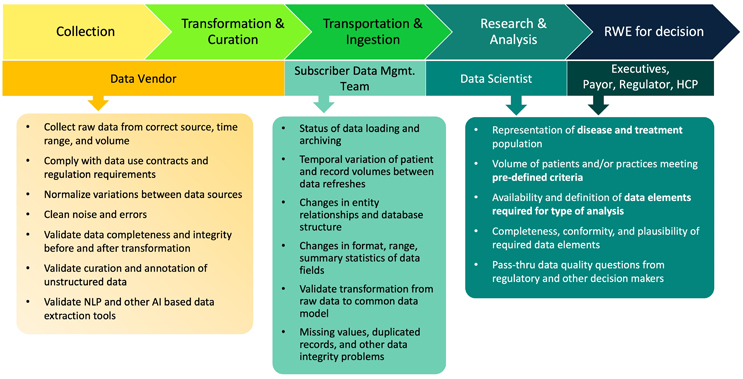

The value of real-world evidence (RWE) derived from real-world data (RWD) has been demonstrated over the past decade in a broad range of biopharmaceutical applications, including marketing strategy, clinical trial optimization, label expansion, and payer approval. Increasing recognition and acceptance of RWE has fostered a large market for RWD: four-fifths of pharma companies are actively investing [1], hundreds of RWD products are available for commercial use, health information technologies continue to advance, and regulators in major global regions have issued guidance. The most commonly used RWD, insurance claims and electronic health records (EHR), were collected for administrative purposes (the “primary use”). Before they can be used in an RWE study (a “secondary use”), the raw data must pass through multiple steps that involve various stakeholders (Figure 1), and data quality can be compromised during data collection, transformation and curation, and data ingestion.

Figure 1 Key steps, stakeholders, and data quality concerns in the workflow of RWE generation

Academia and professional communities have made many efforts to improve the quality of RWD. Data quality checks and tools have been built to find potential issues at the database level, and quantitative methods have been explored to automate these checks at large scale [2,3]. Data exchange protocols (HL7, FHIR) and common data models (OHDSI, PCORnet, Sentinel, i2b2) have been adopted to improve interoperability. Foundations and guidelines have been published by pioneering research groups [4,5], followed by regulators [6,7]. However, we are still far from having RWD of perfect quality to support all RWE needs. In day-to-day operation, we need to determine whether the quality of an RWD product meets the requirements for specific diseases, treatments, and outcomes (i.e., the “use case”).

This article describes how data scientists from the Real-World Data Analytics and Innovation team at Merck applied the Use-case specific Relevance and Quality Assessment (UReQA), a fit-for-purpose RWD quality framework, to assess and select high-quality and relevant data for RWE studies. The framework was recently published [8] in the Value & Outcomes Spotlight magazine by ISPOR and has been applied in the assessments of over 20 oncology RWD products.

How Does Merck Evaluate The Relevance And Quality Of RWD?

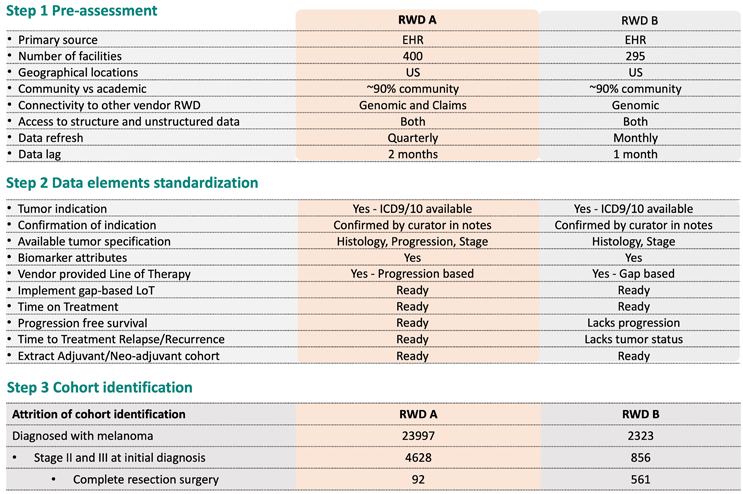

For a quick recap, the UReQA framework assesses the relevance and quality of RWD in five steps. The first three steps check if the RWD product is relevant to the use case (Figure 2), and the other two steps focus on data quality.

We first ask RWD vendors to answer a predefined list of questions regarding high-level characteristics of the data. How well the source data represents the disease population and treatment landscape is often of top interest. Data refresh frequency and lag are also important, particularly for use cases on relatively new indications and treatments. The second step is data element standardization. Using the data dictionary and other reference documents from the data vendor, we check whether all data elements required for generating the RWE are available. We also review the business rules, operational definitions, and algorithms used to auto-curate certain clinical variables. The third step is to define a cohort that minimally meets the research need and then apply that definition to the data to estimate the relevant patient volume. Results are often reported in a data attrition table, which shows where the current RWD falls short of the use case.
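The attrition table from the third step could be sketched as follows. This is a minimal illustration rather than the authors' implementation: the patient records, field names, and inclusion criteria are all hypothetical, and a real assessment would run the equivalent logic against the vendor's actual data.

```python
# Hypothetical patient records; field names are illustrative and do not
# come from any vendor's data dictionary.
patients = [
    {"id": 1, "diagnosis": "NSCLC", "stage": "IV", "age": 67, "has_treatment_record": True},
    {"id": 2, "diagnosis": "NSCLC", "stage": "II", "age": 58, "has_treatment_record": True},
    {"id": 3, "diagnosis": "SCLC", "stage": "IV", "age": 71, "has_treatment_record": True},
    {"id": 4, "diagnosis": "NSCLC", "stage": "IV", "age": 17, "has_treatment_record": True},
    {"id": 5, "diagnosis": "NSCLC", "stage": "IIIB", "age": 62, "has_treatment_record": False},
    {"id": 6, "diagnosis": "NSCLC", "stage": "IV", "age": 74, "has_treatment_record": True},
]

# Ordered minimum inclusion criteria (assumed for this example only).
criteria = [
    ("Diagnosed with target indication", lambda p: p["diagnosis"] == "NSCLC"),
    ("Advanced or metastatic stage", lambda p: p["stage"] in {"IIIB", "IV"}),
    ("Adult at diagnosis", lambda p: p["age"] >= 18),
    ("At least one treatment record", lambda p: p["has_treatment_record"]),
]


def build_attrition_table(records, criteria):
    """Apply each criterion in order and record how many patients remain."""
    rows = [{"step": "All patients in data", "remaining": len(records), "excluded": 0}]
    remaining = list(records)
    for label, keep in criteria:
        kept = [p for p in remaining if keep(p)]
        rows.append(
            {"step": label, "remaining": len(kept), "excluded": len(remaining) - len(kept)}
        )
        remaining = kept
    return rows


attrition = build_attrition_table(patients, criteria)
for row in attrition:
    print(f"{row['step']:<35} remaining={row['remaining']:>3} excluded={row['excluded']:>3}")
```

Applying the criteria sequentially makes it visible which requirement removes the most patients, which is usually the first place to look when a product turns out to have too small a cohort.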

Figure 2 Example of RWD relevance assessment

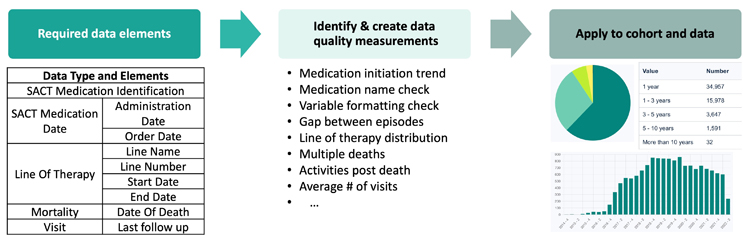

The fourth step is verification and validation. We design quantitative data quality checks to measure the completeness, conformity, and plausibility of each required data element (Figure 3) and run them on the vendor's actual data for patients in the cohort defined in the third step. Ideally, the measurements are validated against a gold-standard RWD product. In the absence of such a product, internal and external disease and treatment knowledge may be used. For example, the completeness of mortality records may be compared against published survival rates for the patient population, and temporal patterns of medication utilization can be validated against the regulatory approval timeline. The final step is to benchmark RWD products against each other using the quantified quality assessment results and to recommend RWD products for each use case.
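As a rough sketch of what completeness, conformity, and plausibility checks might look like for a treatment start and end date, consider the example below. The records, field names, expected date format, and approval date are all assumptions made for illustration, and the metric definitions are one reasonable reading of the three dimensions rather than the framework's official formulas.

```python
from datetime import date

# Hypothetical treatment records; field names and values are illustrative only.
records = [
    {"patient_id": 1, "drug": "drug_a", "start_date": "2019-03-01", "end_date": "2019-09-15"},
    {"patient_id": 2, "drug": "drug_a", "start_date": "2018-01-10", "end_date": None},
    {"patient_id": 3, "drug": "drug_a", "start_date": "03/05/2020", "end_date": "2020-08-01"},
    {"patient_id": 4, "drug": "drug_a", "start_date": "2016-05-20", "end_date": "2016-04-01"},
]

# Hypothetical regulatory approval date for the drug of interest.
APPROVAL_DATE = date(2017, 1, 1)


def parse_iso(value):
    """Return a date for an ISO 8601 string such as YYYY-MM-DD, else None."""
    try:
        return date.fromisoformat(value)
    except (TypeError, ValueError):
        return None


def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    filled = [r for r in rows if r.get(field) not in (None, "")]
    return len(filled) / len(rows)


def conformity(rows, field):
    """Among non-missing values, share that follow the expected date format."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    return sum(parse_iso(v) is not None for v in values) / len(values)


def plausibility(rows, approval_date):
    """Among rows with a usable start date, share with a plausible timeline."""
    checked = 0
    plausible = 0
    for r in rows:
        start = parse_iso(r.get("start_date"))
        end = parse_iso(r.get("end_date"))
        if start is None:
            continue  # missing or malformed starts are covered by the other checks
        checked += 1
        starts_after_approval = start >= approval_date
        ends_after_start = end is None or end >= start
        if starts_after_approval and ends_after_start:
            plausible += 1
    return plausible / checked


for field in ("start_date", "end_date"):
    print(field, completeness(records, field), conformity(records, field))
print("plausibility", plausibility(records, APPROVAL_DATE))
```

The functions assume at least one evaluable row; a production check would also need to handle empty cohorts and report the denominators alongside each rate.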

Figure 3 Example of data quality verification and validation measurements for oncology in real-world time to treatment discontinuation
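The final benchmarking step could be sketched along the following lines. The article does not say how the quantified results are combined, so the product names, scores, minimum thresholds, and the unweighted mean used for ranking are all hypothetical choices made for this example.

```python
# Hypothetical quality results for four RWD products in a single use case.
results = {
    "product_a": {"cohort_size": 1200, "completeness": 0.95, "conformity": 0.90, "plausibility": 0.97},
    "product_b": {"cohort_size": 300, "completeness": 0.99, "conformity": 0.99, "plausibility": 0.99},
    "product_c": {"cohort_size": 2500, "completeness": 0.70, "conformity": 0.95, "plausibility": 0.98},
    "product_d": {"cohort_size": 800, "completeness": 0.88, "conformity": 0.96, "plausibility": 0.99},
}

# Assumed use-case requirements; real thresholds depend on the study design.
MIN_COHORT_SIZE = 500
MIN_SCORES = {"completeness": 0.80, "conformity": 0.80, "plausibility": 0.90}


def failed_requirements(metrics):
    """List the requirements that a product does not meet."""
    failures = []
    if metrics["cohort_size"] < MIN_COHORT_SIZE:
        failures.append("cohort_size")
    failures += [name for name, floor in MIN_SCORES.items() if metrics[name] < floor]
    return failures


def overall_score(metrics):
    """Unweighted mean of the three quality dimensions (an assumption)."""
    return sum(metrics[name] for name in MIN_SCORES) / len(MIN_SCORES)


def benchmark(all_results):
    """Rank products that pass every requirement; report why others fail."""
    eligible = {n: m for n, m in all_results.items() if not failed_requirements(m)}
    ranked = sorted(eligible, key=lambda n: overall_score(eligible[n]), reverse=True)
    excluded = {
        n: failed_requirements(m) for n, m in all_results.items() if n not in eligible
    }
    return ranked, excluded


ranked, excluded = benchmark(results)
print("Recommended order:", ranked)
print("Excluded:", excluded)
```

Gating on minimum requirements before ranking reflects the staged nature of the assessment: a product with too few relevant patients is dropped regardless of how clean its data elements are.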

What Do We Learn In Data Quality Assessments?

Our team applied the UReQA framework to assess the data quality of over 20 oncology RWD products across different tumor indications, cancer stages, and geographical regions. Over half of the data quality assessments stopped at the cohort identification step because too few patients met the minimum inclusion criteria. Only one-fourth of RWD products remained after the data quality verification and validation checks. Here are key lessons we learned:

- RWD sourced from nationwide clinical practice and major insurance payer data often offer a large volume of patient data with good representation of the overall disease population.

- RWD sourced from a single or a few large clinical institutes have the potential to capture a more comprehensive picture of the patient treatment trajectory.

- Many data elements for oncology RWE research need manual or automated curation from raw EHR data. Knowing the details of rules and algorithms used in the curation is important to determine the quality of RWD, but such information was absent in some vendors’ data dictionaries and supporting documents.

- More high-quality RWD products are available for studying treatment patterns in advanced and metastatic stage cancer indications. However, the quality of tumor status (histology, progression, and recurrence), biomarker, performance score, laboratory testing results, and mortality data in many RWD products is suboptimal. There are also gaps in RWD to support RWE generation for primary, neoadjuvant, and adjuvant treatment in early-stage cancer.

- In some assessments, what we read in marketing materials and data dictionaries differed from what we found in the actual data. This happened more often than we expected. On the one hand, this highlights the necessity of conducting data quality assessment on actual data before licensing decisions. On the other hand, some vendors need to improve knowledge sharing and alignment between client-facing, data engineering, and clinical curation teams in RWD product delivery.

- Many vendors pledged to improve the completeness and plausibility of the RWD in future data releases. The conformity of RWD is also important for improving interoperability and ease of use. However, this dimension of data quality did not receive the same level of attention.

How Can We Improve RWD Quality Together?

Conducting the full set of data quality assessments above can be time-consuming, but the return in the long run is worthwhile. We can avoid subscribing to or selecting low-quality or less relevant data for an RWE study and allocate more resources to RWD products that are ready for RWE generation. We also believe that cross-stakeholder collaboration is important to increase the availability of relevant and high-quality RWD for more use cases. Here are areas we suggest considering:

- Iterative evolution of use-case specifications. We need to collaboratively track changes in research requirements and external data quality efforts and adjust the specifications accordingly.

- Sharing definitions of use-case specifications, including relevant quality thresholds and identification of benchmarking resources for validation strategies.

- External transparency of RWD quality findings. This includes data vendors’ consent to and support for publishing data quality assessment results, especially critical findings. This is particularly important for building a robust and reliable RWD ecosystem.

- Partnership between data vendors and pharma and sponsors. Sharing the cost and benefits of identifying and resolving data quality issues can boost in-depth and long-term collaboration.

This article follows Boshu Ru’s presentation on the same topic at SCOPE 2022.

Disclaimers: The opinions expressed in this feature are those of the authors and do not necessarily reflect the views of any organizations.

References

1. Databricks. White paper: Realizing the value of real-world evidence. FIERCE Pharma. May 2022. Available from: https://www.databricks.com/p/whitepaper/realizing-the-value-of-real-world-evidence

2. Estiri H, Klann JG, Murphy SN. A clustering approach for detecting implausible observation values in electronic health records data. BMC Med Inform Decis Mak 2019;19(1):142.

3. Estiri H, Murphy SN. Semi-supervised encoding for outlier detection in clinical observation data. Comput Methods Programs Biomed 2019;181:104830.

4. Kahn MG, Callahan TJ, Barnard J, Bauck AE, Brown J, Davidson BN, et al. A harmonized data quality assessment terminology and framework for the secondary use of electronic health record data. EGEMS (Wash DC) 2016;4(1):1244.

5. Mahendraratnam N, Silcox C, Mercon K, Kroetsch A, Romine M, Harrison N, et al. Determining real-world data’s fitness for use and the role of reliability. Duke-Margolis Center for Health Policy; 2019. Available from: https://healthpolicy.duke.edu/publications/determining-real-world-datas-fitness-use-and-role-reliability

6. U.S. Food & Drug Administration (FDA). Real-world data: Assessing electronic health records and medical claims data to support regulatory decision-making for drug and biological products. September 2021. Available from: https://www.fda.gov/media/152503/download

7. U.S. Food & Drug Administration (FDA). Submitting documents using real-world data and real-world evidence to FDA for drug and biological products; Guidance for industry. September 2022. Available from: https://www.regulations.gov/document/FDA-2019-D-1263-0014

8. Desai K, Chandwani S, Ru B, et al. Fit-for-purpose real-world data assessments in oncology: a call for cross-stakeholder collaboration. Value & Outcomes Spotlight 2021;7:34–7. Available from: https://www.ispor.org/publications/journals/value-outcomes-spotlight/vos-archives/issue/view/expanding-the-value-conversation/fit-for-purpose-real-world-data-assessments-in-oncology-a-call-for-cross-stakeholder-collaboration

About The Authors:

Boshu Ru is an Associate Director of Outcomes Research and Data Science on the Real-world Data Analytics and Innovation team at the Merck Research Laboratories Center of Observational and Real-world Evidence. He contributes to multiple fit-for-purpose oncology RWD quality assessments and supports RWE generation for the cardiovascular, metabolic, and oncology therapeutic areas. Boshu received his Ph.D. training in computing and information systems and is active in RWD quality and healthcare data mining research.

Weilin Meng is a Senior Director of Outcomes Research and Data Science on the Real-world Data Analytics and Innovation team of the Merck Research Laboratories Center of Observational and Real-World Evidence. His background is in mathematics and operations research, and his focus is on applying machine learning and natural language processing to healthcare and social media data. Weilin has also contributed to oncology real-world studies through algorithms, methodologies, and predictive modeling.
