Tufts & PACT Release New DCT Analysis

By Dan Schell, Chief Editor, Clinical Leader

PACT is not an acronym I was familiar with when I received an email the other day outlining some new DCT research. Then again, there are so many acronyms in clinical research, with new ones cropping up all the time, that it’s not uncommon for me to be Googling “What does XYZ mean in clinical research.” In this case, the answer was the Partnership for Advancing Clinical Trials, a consortium launched in 2023 and facilitated by the Tufts CSDD that consists of 30+ pharma and bio companies, CROs, and service providers.

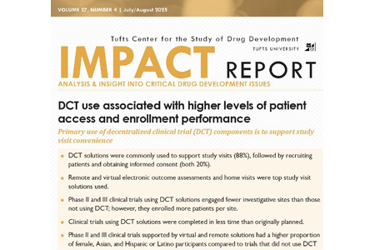

PACT is all about DCTs, and this new research was a Tufts “Impact Report” focused on a survey of sponsors and CROs that had experience with DCT solutions supporting 69 ongoing and recently completed clinical trials.

Before I dug into the research, I reached out to Ken Getz at Tufts (Ken is also a member of the Clinical Leader Editorial Board) to find out a little more about these Impact Reports and PACT, in general. “We’re averaging two to three white papers and impact reports on DCT use and experience every 12 months,” he said. “We are routinely collecting new data on the use and impact of DCT solutions from various projects.” As an example, he cited another recent Impact Report that discussed perceptions of 301 patients and 160 investigator sites on the delivery of IP directly to a patient’s home from a research center or local warehouse.

THE DTRA-PACT CONNECTION

One acronym-labeled clinical trial organization I do know about is DTRA (Decentralized Trials & Research Alliance), so I wondered if there was a crossover of initiatives between the two entities. Getz explained that PACT had been “gathering empirical evidence and sharing our findings with the DTRA community.” Indeed, as I perused the PACT website, I did find a helpful video from the 2024 DTRA Annual Conference featuring a presentation by Zak Smith, assistant director, data science and analytics at Tufts CSDD. I even reached out to another Clinical Leader Editorial Board Member, Craig Lipset, who is the co-chair of DTRA. He explained, “We did not want to do any redundant work, and so we provided PACT with our frameworks and definitions.” Lipset also explained that gathering data on DCTs can sometimes be challenging: “Sponsor CTMS’ have the capability to track DCT elements, but most sponsors have not established a process for those fields to be owned and entered.”

THE 3 DATA POINTS THAT GRABBED MY ATTENTION

OK, let’s get to that research. The first thing I looked up was the source of the data, which was from 14 PACT member organizations and included 69 global clinical trials, primarily Phase 2 and 3, across multiple therapeutic areas. That may not seem like a lot, but I also recognize this is only one dataset, and PACT is still pretty new. I’m guessing as time goes on — and sponsors get better at tracking DCT elements in trials — this kind of research will be more representative of a broader swath of the industry.

I’m well aware everyone sees different key takeaways when looking at a research report, and as such, you may not agree with mine. That’s cool with me; I’ve never claimed to be an expert. With that being said, here are my non-AI-assisted opinions of this research.

- I wasn’t surprised that electronic outcome assessments (EOAs) were the most used DCT solutions supporting study visits, representing nearly 73% of clinical trials. I was surprised that I had never heard of an “EOA” (are you seeing a pattern here with me?). According to the report, an EOA is:

Data from outcome assessments is collected digitally using a smart phone, computer, or mobile device (e.g. eCOA, ePRO, electronic observer-reported, electronic performance outcomes, electronic patient diaries). To be considered a DCT solution, it must be possible for participants to complete the EOA outside of the trial site. EOAs completed during regular study visits do not qualify.

One thing that confused me was the categorization of e-diaries or even ePROs as DCT “solutions.” I always considered those basic elements of most trials, not just ones we deem a DCT. But I guess that’s part of the challenge of doing this kind of research: you’re trying to define the elements, not the trials themselves. I know, “tomayto, tomahto”; it’s confusing.

- There was one metric, 20.9%, that really had me scratching my head. It was in a chart comparing patient demographic distributions between trials that used and did not use DCT solutions. It was the proportion of Asian participants in trials that used some DCT solution, compared with 14.2% for non-DCT trials. That gap was far larger than for any of the other racial identity categories (American Indian or Alaska Native, Native Hawaiian or Pacific Islander, and Black). Why are there soooo many more Asian participants in these DCT-related trials?

- The chart comparing enrollment performance between trials using and not using DCT solutions had some really impressive bullet points. My favorite: “The average number of participants enrolled per site was 160% higher for clinical trials supported by DCT solutions, compared to traditional clinical trials.”
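For readers who want to sanity-check the two figures in the last two bullets, here is a minimal Python sketch of the arithmetic. It is an illustration, not part of the Tufts report: the function names are made up, and the baseline of 10 participants per site is a hypothetical number chosen only to show what “160% higher” implies (2.6 times the baseline, not 1.6 times).

```python
def value_after_increase(baseline: float, pct_higher: float) -> float:
    """Return the value that sits pct_higher percent above baseline."""
    return baseline * (1 + pct_higher / 100)


def compare_shares(dct_pct: float, non_dct_pct: float) -> tuple[float, float]:
    """Return (percentage-point gap, relative difference in percent)."""
    gap = dct_pct - non_dct_pct
    relative = gap / non_dct_pct * 100
    return gap, relative


# Hypothetical baseline: 10 participants per site in a traditional trial.
# "160% higher" then means 10 * 2.6 participants per site.
dct_per_site = value_after_increase(10, 160)
print(round(dct_per_site, 1))

# Figures quoted in the article: 20.9% (DCT trials) vs. 14.2% (non-DCT trials).
gap, relative = compare_shares(20.9, 14.2)
print(round(gap, 1), round(relative, 1))
```

Read this way, the 20.9% versus 14.2% comparison is a gap of about 6.7 percentage points, or roughly 47% in relative terms, which is a reminder that the two ways of stating a difference can feel very different.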

Remember, PACT is still relatively new and a work in progress. Getz explained that Scimitar, a consulting and IT firm that has been collaborating with the PACT consortium since its founding, is currently helping the organization build a real-time data collection platform. He also said they will be releasing another report shortly based on DCT data provided by investigative sites. Now that I know what PACT is, I plan to stay in touch with Getz and Tufts about these Impact Reports, so watch for future articles referencing some of these analytics.